Over the last few weeks I’ve been writing code to integrate Snowflake entities into Purview, because there wasn’t an official connector. You can imagine my surprise when I noticed last Friday that there’s a new, unannounced preview connector that does the work for us.

PyApacheAtlas

If you have read or watched anything about the recommended way to create custom entities in Purview, you have probably heard of this library.

The reference video is Azure Purview REST API Deep Dive. It covers the object model and most of the details you need to know before you start working with the Purview API. Most of what you find about the Apache Atlas endpoints is also valid for Purview.
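Because Purview speaks the Apache Atlas API, a custom entity starts with a type definition. Here's a minimal sketch of the JSON body for the Atlas v2 typedefs endpoint (`/api/atlas/v2/types/typedefs`), assuming entity types that inherit from the built-in `DataSet` type. The type names `snowflake_db`, `snowflake_schema` and `snowflake_table` and the extra `comment` attribute are hypothetical choices for illustration, not the types the new Purview connector uses.

```python
import json
from typing import List

# Hypothetical custom type names; not the types used by Purview's own connector.
CUSTOM_TYPES = ["snowflake_db", "snowflake_schema", "snowflake_table"]


def entity_def(name: str, super_type: str = "DataSet") -> dict:
    """Build one Atlas entityDef that inherits from DataSet.

    name and qualifiedName come from DataSet's supertypes, so only a
    hypothetical optional 'comment' attribute is declared here.
    """
    return {
        "category": "ENTITY",
        "name": name,
        "superTypes": [super_type],
        "attributeDefs": [
            {
                "name": "comment",
                "typeName": "string",
                "isOptional": True,
                "cardinality": "SINGLE",
                "isUnique": False,
                "isIndexable": False,
            }
        ],
    }


def typedefs_payload(type_names: List[str]) -> dict:
    """Request body for POST /api/atlas/v2/types/typedefs (entity types only)."""
    return {"entityDefs": [entity_def(n) for n in type_names]}


if __name__ == "__main__":
    print(json.dumps(typedefs_payload(CUSTOM_TYPES), indent=2))
```

If you use PyApacheAtlas you normally don't build this JSON by hand: as far as I know its client exposes an `upload_typedefs` method that sends the same kind of payload for you.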

Snowflake to Purview custom code

Here’s a sample based on the Redshift code available in the PyApacheAtlas repo, in case you want to dig into how this works or the new connector doesn’t give you everything you need.
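The core of that kind of integration is turning Snowflake metadata into a batch of Atlas entities. This is not the sample mentioned above, just a minimal sketch of the idea: it takes rows shaped like Snowflake's `INFORMATION_SCHEMA.TABLES` columns (`TABLE_CATALOG`, `TABLE_SCHEMA`, `TABLE_NAME`) and emits one database, one schema and one table entity each, linked through negative placeholder GUIDs so the whole hierarchy can be uploaded in a single batch. The type names, the `snowflake://` qualifiedName scheme and the `parent` relationship attribute are all hypothetical and would need matching typedefs and relationship defs.

```python
from itertools import count
from typing import Dict, List, Optional, Tuple

# Hypothetical custom type names and qualifiedName scheme (not Purview's own).
DB_TYPE, SCHEMA_TYPE, TABLE_TYPE = "snowflake_db", "snowflake_schema", "snowflake_table"


def build_entities(account: str, rows: List[Tuple[str, str, str]]) -> List[dict]:
    """Turn (TABLE_CATALOG, TABLE_SCHEMA, TABLE_NAME) rows into Atlas entities.

    Databases and schemas are emitted once each; every entity gets a negative
    placeholder guid so children can point at parents in the same batch.
    """
    next_guid = count(-1, -1)  # yields -1, -2, -3, ...
    seen: Dict[str, dict] = {}  # qualifiedName -> reference to that entity
    entities: List[dict] = []

    def add(type_name: str, name: str, qn: str, parent_qn: Optional[str]) -> None:
        if qn in seen:
            return  # database or schema already created for an earlier row
        guid = str(next(next_guid))
        seen[qn] = {"guid": guid, "typeName": type_name}
        entity = {"typeName": type_name, "guid": guid,
                  "attributes": {"name": name, "qualifiedName": qn}}
        if parent_qn is not None:
            # "parent" is a hypothetical relationship attribute; it requires a
            # matching relationshipDef between the parent and child types.
            entity["relationshipAttributes"] = {"parent": seen[parent_qn]}
        entities.append(entity)

    for db, schema, table in rows:
        db_qn = f"snowflake://{account}/{db}"
        schema_qn = f"{db_qn}/{schema}"
        add(DB_TYPE, db, db_qn, None)
        add(SCHEMA_TYPE, schema, schema_qn, db_qn)
        add(TABLE_TYPE, table, f"{schema_qn}/{table}", schema_qn)
    return entities


if __name__ == "__main__":
    rows = [("SALES", "PUBLIC", "ORDERS"), ("SALES", "PUBLIC", "CUSTOMERS")]
    for e in build_entities("myaccount", rows):
        print(e["guid"], e["typeName"], e["attributes"]["qualifiedName"])
```

The resulting list is what you would send as the `entities` array to the Atlas bulk entity endpoint. PyApacheAtlas's entity objects and upload helpers handle most of this bookkeeping for you, which is the main reason the library is the recommended route.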

New connector

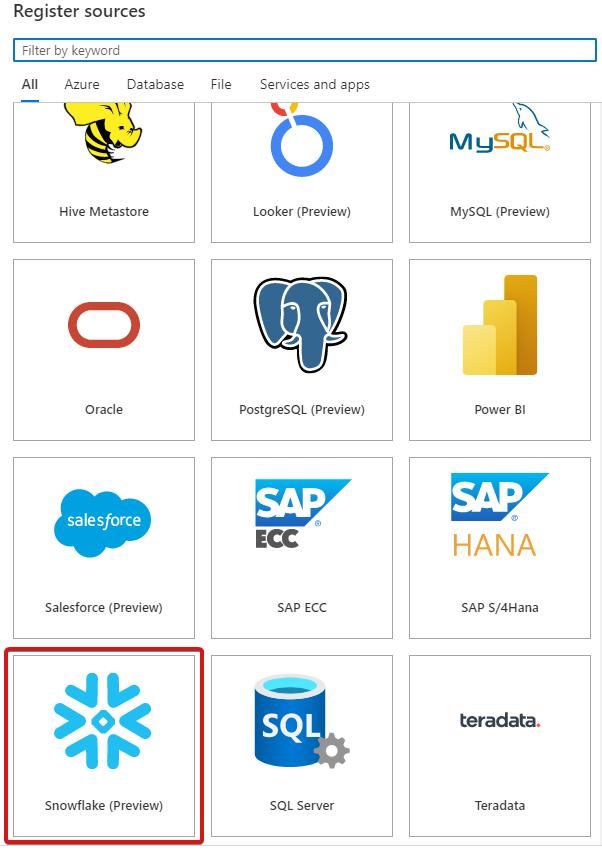

Going into your Purview instance you should be able to see the new connector:

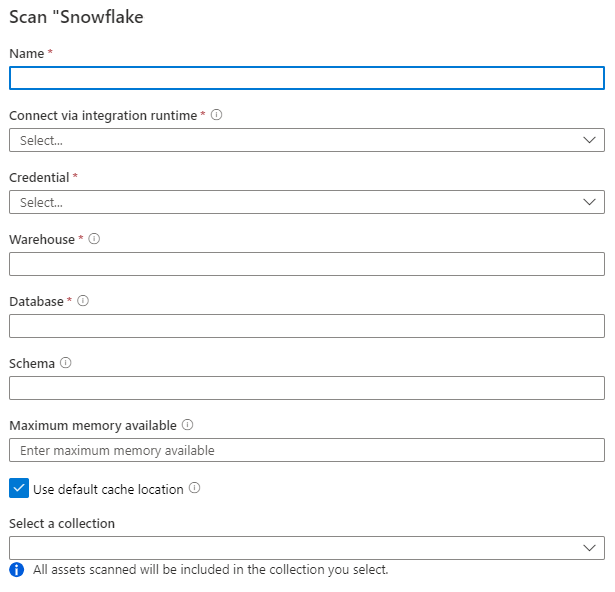

Here’s the list of requirements:

- A Self-Hosted Integration Runtime with the required Java dependencies. See this for more details.

- An Azure Key Vault to store the Snowflake account credentials.

Scheduling

At this point you might think your work is done, but it isn’t: you need to create a scan for each one of your schemas.

For me this is a no-go: the instance my team administers has more than one hundred databases, and we can’t spend endless hours configuring scans for every schema inside them.

In the coming days I will look into the Scans REST API to see whether it can help us automate this process.
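Before touching the API, it helps to see the shape of the automation: one scan per database/schema pair, each created with a PUT request. Here's a minimal sketch that only builds the plan, assuming the `datasources/{dataSourceName}/scans/{scanName}` path of the Scans "Create Or Update" operation. The naming convention, the data source name and the endpoint are placeholders, and the request body (scan kind, credential, database and schema properties) is deliberately left out because I haven't verified the Snowflake schema yet.

```python
from typing import Iterable, List, Tuple
from urllib.parse import quote


def scan_name(database: str, schema: str) -> str:
    """Hypothetical naming convention: one scan per database/schema pair."""
    return f"scan-{database}-{schema}".lower()


def plan_scans(endpoint: str, data_source: str, api_version: str,
               targets: Iterable[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Return (scan_name, url) pairs, one PUT target per database/schema.

    The URL path is an assumption based on the Scans REST API; the request
    body still has to be built from the official API reference.
    """
    base = endpoint.rstrip("/")
    plan = []
    for database, schema in targets:
        name = scan_name(database, schema)
        url = (f"{base}/datasources/{quote(data_source, safe='')}"
               f"/scans/{quote(name, safe='')}?api-version={api_version}")
        plan.append((name, url))
    return plan


if __name__ == "__main__":
    # In practice the targets would come from Snowflake, e.g. SHOW SCHEMAS per database.
    targets = [("SALES", "PUBLIC"), ("SALES", "STAGING"), ("HR", "PUBLIC")]
    for name, url in plan_scans("https://<your-scan-endpoint>", "snowflake-prod",
                                "<api-version>", targets):
        print(name, url)
```

With more than one hundred databases the target list is generated, not typed, which is exactly the point: the per-schema work moves from the portal into a loop.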

Summary

This new connector arrived just in time to save us from maintaining our own code to pull Snowflake objects into Purview. All that’s left to close this chapter is automating the creation of the scans.

The next step is writing the code that builds the Process objects linking our ELT pipelines to Snowflake, and from there to Power BI, using the PyApacheAtlas library.
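For reference, lineage in Atlas is expressed with `Process` entities whose `inputs` and `outputs` attributes point at `DataSet` entities. Here's a minimal sketch of that payload, assuming the Snowflake tables and Power BI datasets already exist in the catalog and can be referenced by `typeName` plus `uniqueAttributes.qualifiedName` instead of their GUIDs. The `process_entity` helper, the `elt://` qualified names and the `powerbi_dataset` and source type names are hypothetical; the real type names and qualified names should be taken from what the scans put into your catalog.

```python
from typing import List, Tuple

Ref = Tuple[str, str]  # (typeName, qualifiedName) of an entity already in Purview


def process_entity(name: str, qualified_name: str, inputs: List[Ref],
                   outputs: List[Ref], guid: str = "-1",
                   type_name: str = "Process") -> dict:
    """Atlas Process entity whose inputs/outputs point at existing DataSets.

    Existing entities are referenced by typeName plus uniqueAttributes, so
    their guids do not need to be looked up first.
    """
    def ref(entity: Ref) -> dict:
        t, qn = entity
        return {"typeName": t, "uniqueAttributes": {"qualifiedName": qn}}

    return {
        "typeName": type_name,
        "guid": guid,
        "attributes": {
            "name": name,
            "qualifiedName": qualified_name,
            "inputs": [ref(e) for e in inputs],
            "outputs": [ref(e) for e in outputs],
        },
    }


if __name__ == "__main__":
    orders = ("snowflake_table", "snowflake://myaccount/SALES/PUBLIC/ORDERS")
    # Hypothetical lineage chain: ELT job -> Snowflake table -> Power BI dataset.
    load = process_entity("load_orders", "elt://load_orders",
                          [("hypothetical_source_type", "source://orders")], [orders])
    report = process_entity("orders_to_powerbi", "elt://orders_to_powerbi",
                            [orders], [("powerbi_dataset", "powerbi://orders_dataset")],
                            guid="-2")
    print(load, report, sep="\n")
```

Chaining two processes through the same Snowflake table is what stitches the pipeline, the warehouse and the report into one lineage graph.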

Have fun!